Human Translators Are Still on Top — for Now

Machine translation works well for sentences but turns out to falter at the document level, computational linguists have found

By Emerging Technology from the arXiv

You may have missed the popping of champagne corks and the shower of ticker tape, but in recent months computational linguists have begun to claim that neural machine translation now matches the performance of human translators.

The technique of using a neural network to translate text from one language into another has improved by leaps and bounds in recent years, thanks to the ongoing breakthroughs in machine learning and artificial intelligence. So it is not really a surprise that machines have approached the performance of humans. Indeed, computational linguists have good evidence to back up this claim.

But today, Samuel Laubli at the University of Zurich and a couple of colleagues say the champagne should go back on ice. They do not dispute their colleagues’ results but say the testing protocol fails to take account of the way humans read entire documents. When this is assessed, machines lag significantly behind humans, they say.

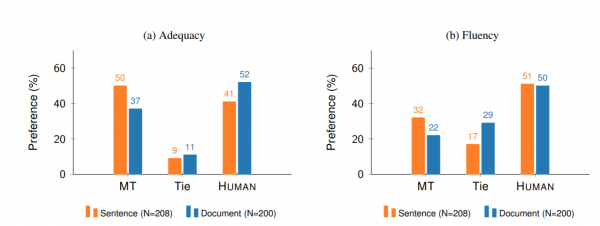

At issue is how machine translation should be evaluated. This is currently done on two measures: adequacy and fluency. The adequacy of a translation is determined by professional human translators who read both the original text and the translation to see how well it expresses the meaning of the source. Fluency is judged by monolingual readers who see only the translation and determine how well it is expressed in English.

Computational linguists agree that this system gives useful ratings. But according to Laubli and co, the current protocol only compares translations at the sentence level, whereas humans also evaluate text at the document level.

So they have developed a new protocol to compare the performance of machine and human translators at the document level. They asked professional translators to assess how well machines and humans translated over 100 news articles written in Chinese into English. The examiners rated each translation for adequacy and fluency at the sentence level but, crucially, also at the level of the entire document.

The results make for interesting reading. To start with, Laubli and co found no significant difference in the way professional translators rated the adequacy of machine- and human-translated sentences. By this measure, humans and machines are equally good translators, which is in line with previous findings.

However, when it comes to evaluating the entire document, human translations are rated as more adequate and more fluent than machine translations. “Human raters assessing adequacy and fluency show a stronger preference for human over machine translation when evaluating documents as compared to isolated sentences,” they say.
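To make "no significant difference" versus "a stronger preference" concrete, here is a minimal sketch of a two-sided sign test, one common way to check whether raters prefer one translation over another more often than chance would explain. The choice of test is an assumption made for illustration (this article does not say which test the researchers used), the function name is hypothetical, and the counts are invented rather than taken from the study.

```python
from math import comb


def sign_test_p_value(prefers_a, prefers_b):
    """Two-sided sign test p-value for paired preferences.

    prefers_a / prefers_b are the numbers of judgments favouring each
    translation. Ties must be dropped before calling this function.
    """
    n = prefers_a + prefers_b
    if n == 0:
        return 1.0
    k = min(prefers_a, prefers_b)
    # Probability of a split at least this lopsided if raters chose at random.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Invented counts for illustration only; these are NOT results from the study.
sentence_level = sign_test_p_value(prefers_a=5, prefers_b=5)
document_level = sign_test_p_value(prefers_a=8, prefers_b=2)
print(f"sentence-level p = {sentence_level:.3f}")
print(f"document-level p = {document_level:.3f}")
```

An even split gives a p-value of 1, meaning no evidence of a preference, while a lopsided split pushes the p-value down. With only ten judgments even an 8-to-2 split is not conclusive, which is one reason evaluations of this kind need many rated items.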

The researchers think they know why. “We hypothesise that document-level evaluation unveils errors such as mistranslation of an ambiguous word, or errors related to textual cohesion and coherence, which remain hard or impossible to spot in a sentence-level evaluation,” they say.

The team gives the example of a new app called “微信挪车,” which humans consistently translate as “WeChat Move the Car” but which machines often translate in several different ways in the same article. Machines translate this phrase as “Twitter Move Car,” “WeChat mobile,” and “WeChat Move.” This kind of inconsistency, say Laubli and co, makes documents harder to follow.
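The inconsistency described here is invisible when each sentence is judged alone but easy to spot across a whole document. The sketch below illustrates that idea with a simple string check. It is not the researchers' method: the function names are hypothetical, the renderings are the ones quoted above, and the sample sentences are invented for illustration.

```python
def find_renderings(sentences, candidates):
    """For each sentence, return the longest candidate rendering it contains.

    Longest-first matching keeps "WeChat Move" from shadowing
    "WeChat Move the Car". Sentences with no match yield None.
    """
    ordered = sorted(candidates, key=len, reverse=True)
    found = []
    for sentence in sentences:
        lowered = sentence.lower()
        match = next((c for c in ordered if c.lower() in lowered), None)
        found.append(match)
    return found


def is_consistent(sentences, candidates):
    """True if the document uses at most one rendering of the term."""
    used = {r for r in find_renderings(sentences, candidates) if r is not None}
    return len(used) <= 1


# Renderings quoted in the article for the app name.
RENDERINGS = ["WeChat Move the Car", "Twitter Move Car", "WeChat mobile", "WeChat Move"]

# Invented sentences for illustration only.
human_doc = [
    "The city launched WeChat Move the Car last month.",
    "Drivers say WeChat Move the Car saves them time.",
]
machine_doc = [
    "The city launched Twitter Move Car last month.",
    "Drivers say WeChat mobile saves them time.",
    "Officials plan to expand WeChat Move next year.",
]

print(is_consistent(human_doc, RENDERINGS))
print(is_consistent(machine_doc, RENDERINGS))
```

Each machine sentence reads acceptably on its own, and only the document-level check reveals that three different names refer to the same app.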

This suggests that the way machine translation is evaluated needs to evolve away from a system where raters consider each sentence in isolation.

“As machine translation quality improves, translations will become harder to discriminate in terms of quality, and it may be time to shift towards document-level evaluation, which gives raters more context to understand the original text and its translation, and also exposes translation errors related to discourse phenomena which remain invisible in a sentence-level evaluation,” say Laubli and co.

That change should help machine translation improve, which means it is still set to surpass human translation, just not yet.